As artificial intelligence (AI) continues to transform industries, a critical challenge is emerging: AI-generated content is becoming a threat to AI itself. This paradoxical problem, in which AI’s output may degrade the quality of future AI systems, is the focus of a recent New York Times article by Aatish Bhatia. Bhatia explores what happens when AI systems are trained on data increasingly contaminated by their own output, a phenomenon that could cause AI performance to decline significantly over time. What follows is an overview of the article and the issues it raises.

## The Rise of AI-Generated Content

The internet is rapidly filling with AI-generated text, images, and other content. Sam Altman, CEO of OpenAI, has said that the company generates about 100 billion words per day, roughly the equivalent of a million novels. A share of this output inevitably finds its way onto the internet, whether as restaurant reviews, social media posts, or even news articles.

However, as AI-generated content spreads, it is becoming increasingly difficult to distinguish it from human-created material. NewsGuard, an organization that tracks online misinformation, has already identified more than a thousand websites that publish error-prone AI-generated news articles. The difficulty of detecting AI content raises concerns not only about the quality of information online but also about the future of AI development itself.

## The Feedback Loop Problem

AI systems are typically trained on vast amounts of data scraped from the internet. As AI-generated content proliferates, these systems risk ingesting AI output during training. This creates a feedback loop in which the output of one AI becomes the input for another, and the quality of what each new system produces can gradually decline.

Bhatia highlights a critical issue: “When generative AI is trained on a lot of its own output, it can get a lot worse.” The problem resembles making a copy of a copy, where each iteration drifts further from the original. Over time, this process can lead to what researchers call “model collapse,” in which the AI’s ability to generate diverse and accurate content deteriorates significantly.

## The Consequences of Model Collapse

Bhatia illustrates model collapse with several examples. A medical-advice chatbot trained on AI-generated medical knowledge might list fewer diseases that match a patient’s symptoms, making it more likely to miss the correct diagnosis. Similarly, an AI history tutor could ingest AI-generated propaganda and lose its ability to separate fact from fiction.

Bhatia references a study published in Nature by researchers in Britain and Canada, which shows that AI models trained on their own output produce a “narrower range of output over time,” an early stage of model collapse. As the models continue to retrain on their own content, their outputs become less diverse and more error-prone. This loss of diversity is a telltale sign of model collapse and could have far-reaching implications for the future of AI.

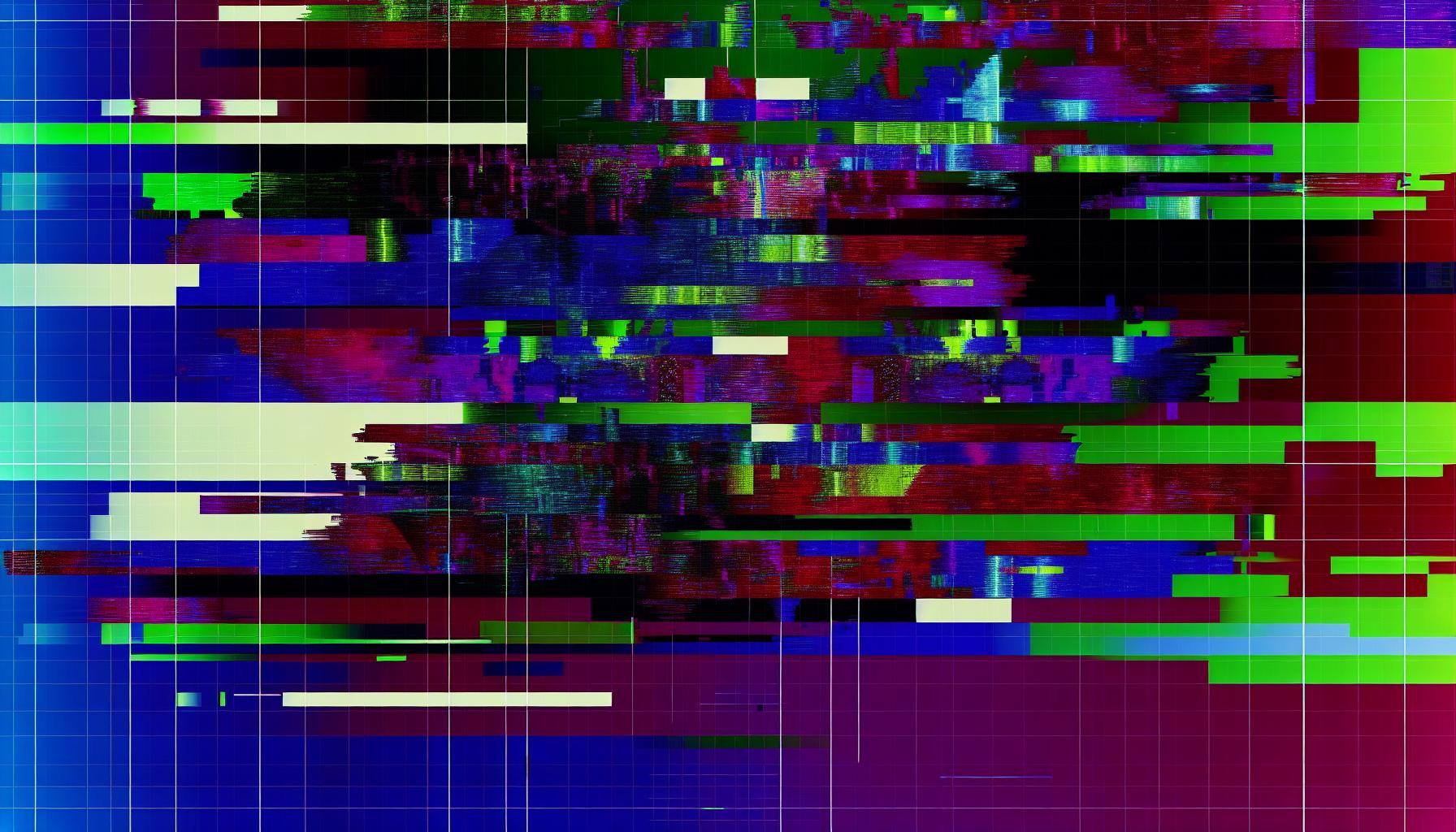

## Degenerative AI: A Broader Issue

The problem of AI feedback loops extends beyond text generation. Researchers at Rice University explored the effects of repeated training on AI-generated images. Their findings reveal that when image-generating AIs are trained on their own output, they begin to produce distorted images, complete with glitches, wrinkled patterns, and mangled features.

This degeneration in image quality underscores the broader issue: AI-generated data is often an inferior substitute for human-created content. Some differences are easy to spot, such as AI-generated images with too many fingers or chatbots spouting absurd facts, but subtler deviations can be just as problematic. These hidden discrepancies accumulate from one generation of models to the next, making it increasingly difficult for AI systems to reproduce the diversity and accuracy of human-generated data.

## The Underlying Causes of Model Collapse

At the heart of model collapse is the way AI models are trained. Generative AI models learn statistical distributions from the vast amounts of data they consume. These distributions capture, for example, which word is most likely to come next in a sentence or how pixels are likely to be arranged in an image, which lets the AI generate content that mimics human output.
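To make the idea of a learned distribution concrete, here is a minimal sketch of about the simplest possible language model, a bigram counter. It assumes a made-up three-sentence corpus and whitespace tokenization, and the function names are hypothetical. Real generative models learn far richer distributions with neural networks, but the basic idea of estimating “which word tends to come next” from data is similar.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each preceding word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_distribution(counts: dict[str, Counter], prev: str) -> dict[str, float]:
    """Normalize the follower counts of `prev` into probabilities."""
    followers = counts.get(prev, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}


def most_likely_next(counts: dict[str, Counter], prev: str) -> Optional[str]:
    """Return the highest-probability next word (ties go to the word seen first)."""
    dist = next_word_distribution(counts, prev)
    return max(dist, key=dist.get) if dist else None


corpus = [
    "the cat sat on the mat",
    "the cat chased the dog",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(next_word_distribution(model, "the"))  # cat and dog 1/3 each, mat and rug 1/6 each
print(most_likely_next(model, "the"))  # → cat
```

A model like this can only reproduce patterns it has counted, which is why the makeup of its training data matters so much.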

When AI models are retrained on their own output, however, the learned distributions become skewed. Rare outputs are underrepresented in each new round of training data, so the range of possible outputs narrows and results concentrate on a smaller set of similar, less accurate patterns. This effect was observed in a study in which AI-generated digits became less distinct with each generation, eventually collapsing into a single, indistinguishable output.
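The narrowing can be reproduced in a toy simulation. The sketch below is not the setup of any study Bhatia cites. It assumes a hypothetical vocabulary of 5 common words and 30 rare ones, and it models each “generation” as drawing a finite sample from the current model and refitting word frequencies by counting. A word that happens to receive zero samples gets probability zero and can never reappear, so the number of distinct words can only stay the same or shrink.

```python
import random
from collections import Counter


def retrain_on_own_output(probs: dict[str, float], n_samples: int, rng: random.Random) -> dict[str, float]:
    """One generation: sample from the current model, then refit by counting."""
    tokens = list(probs)
    samples = rng.choices(tokens, weights=[probs[t] for t in tokens], k=n_samples)
    counts = Counter(samples)
    # Words that were never sampled are dropped: the refit model gives them probability 0.
    return {t: counts[t] / n_samples for t in tokens if counts[t] > 0}


def simulate(generations: int = 20, n_samples: int = 50, seed: int = 0) -> list[int]:
    """Return the number of distinct words the model can still produce, per generation."""
    rng = random.Random(seed)
    # Hypothetical "human" distribution: a few common words plus a long tail of rare ones.
    raw = {f"word{i}": 1.0 for i in range(5)}
    raw.update({f"rare{i}": 0.1 for i in range(30)})
    total = sum(raw.values())
    probs = {t: p / total for t, p in raw.items()}
    distinct = [len(probs)]
    for _ in range(generations):
        probs = retrain_on_own_output(probs, n_samples, rng)
        distinct.append(len(probs))
    return distinct


print(simulate())  # starts at 35 and never increases
```

Raising `n_samples` slows the loss of rare words but does not eliminate it, since any finite sample has some chance of missing a low-probability word.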

## The Implications for AI Development

The rise of AI-generated content, and the resulting risk of model collapse, has profound implications for the future of AI development. As Bhatia notes, “AI-generated words and images are already beginning to flood social media and the wider web.” This influx of synthetic data not only contaminates the datasets used to train new AI models but can also increase the computing power required to maintain AI performance.

Researchers at New York University (NYU) have found that as AI-generated content becomes more prevalent in training datasets, it takes more computing power to train AI models. This increase in computational demands translates into higher energy consumption and costs, making it more challenging for smaller companies to compete in the AI space.

## The Hidden Dangers of Reduced Diversity

One of the most concerning aspects of model collapse is the erosion of diversity in AI outputs. As companies try to reduce the glitches and “hallucinations” in AI-generated content, they may inadvertently reduce its diversity as well. The effect is particularly evident in AI-generated images of people’s faces, where a loss of diversity can have significant social implications.
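One way to picture how cleanup can cost diversity: suppose each round of filtering discards outputs that sit far from the average, treating them as “glitches,” and the next model is trained only on what survives. The sketch below is a hypothetical illustration, not a method from the research Bhatia describes. It uses plain numbers as stand-ins for outputs and population standard deviation as a crude measure of diversity; `drop_outliers`, `spread_over_rounds`, and the `max_z` threshold are assumptions.

```python
import statistics


def drop_outliers(samples: list[float], max_z: float = 1.5) -> list[float]:
    """Keep only samples within `max_z` standard deviations of the mean."""
    mu = statistics.mean(samples)
    sd = statistics.pstdev(samples)
    return [x for x in samples if abs(x - mu) <= max_z * sd]


def spread_over_rounds(samples: list[float], rounds: int = 5, max_z: float = 1.5) -> list[float]:
    """Filter repeatedly, recording the spread (diversity proxy) after each round."""
    spreads = [statistics.pstdev(samples)]
    for _ in range(rounds):
        samples = drop_outliers(samples, max_z)
        spreads.append(statistics.pstdev(samples))
    return spreads


print(spread_over_rounds([float(x) for x in range(1, 21)]))  # shrinks round after round
```

Because only points more than `max_z` standard deviations from the mean are removed, and `max_z` is at least 1 here, the spread cannot increase from one round to the next; each round simply erodes the tails a little further.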

The decline in linguistic diversity is another concern. Studies have shown that when AI language models are trained on their own output, their vocabulary shrinks and their grammatical structures become less varied. This loss of linguistic diversity can amplify biases present in the data, disproportionately affecting minority groups.
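Shrinking vocabulary can be quantified. A common, simple proxy is distinct-n: the number of unique n-word sequences divided by the total number of n-word sequences in a set of texts. The sketch below assumes whitespace tokenization and made-up example sentences, and it is not necessarily the metric used in the studies Bhatia references.

```python
def distinct_n(texts: list[str], n: int = 1) -> float:
    """Unique n-grams divided by total n-grams across all texts (0.0 if there are none)."""
    ngrams: list[tuple[str, ...]] = []
    for text in texts:
        words = text.lower().split()
        ngrams.extend(tuple(words[i:i + n]) for i in range(len(words) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


varied = ["the quick brown fox jumps", "a lazy dog naps in the sun"]
repetitive = ["the dog is good", "the dog is good", "the dog is nice"]
print(distinct_n(varied))         # 11 unique of 12 words
print(distinct_n(repetitive))     # 5 unique of 12 words
print(distinct_n(repetitive, 2))  # 4 unique of 9 word pairs
```

Tracking a score like this across generations of retrained models is one straightforward way to notice a vocabulary narrowing before it becomes obvious to readers.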

## Potential Solutions to the Feedback Loop Problem

The research Bhatia outlines suggests that the key to preventing model collapse lies in maintaining high-quality, diverse training data. AI companies may need to invest in sourcing human-generated content rather than relying on easily accessible data from the internet. This approach would help keep AI models trained on accurate, diverse data, reducing the risk of degeneration over time.
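A toy way to see why fresh human data helps: blend a fixed share of the original human distribution back in every time the model is refit on its own samples. The sketch assumes a hypothetical vocabulary of 5 common and 30 rare words, and `human_weight` is a hypothetical knob, not a documented practice of any AI company. With `human_weight` above zero, every word in the human data keeps a probability of at least `human_weight` times its original probability, so no word can vanish; with `human_weight = 0`, rare words can drop out permanently.

```python
import random
from collections import Counter


def refit_with_human_data(
    human: dict[str, float],
    model: dict[str, float],
    n_samples: int,
    human_weight: float,
    rng: random.Random,
) -> dict[str, float]:
    """Sample from the current model, refit by counting, then blend in human data."""
    tokens = sorted(model)  # fixed order keeps runs reproducible for a given seed
    samples = rng.choices(tokens, weights=[model[t] for t in tokens], k=n_samples)
    counts = Counter(samples)
    vocab = sorted(set(human) | set(counts))
    return {
        t: human_weight * human.get(t, 0.0) + (1 - human_weight) * counts[t] / n_samples
        for t in vocab
    }


def surviving_words(human_weight: float, generations: int = 20, n_samples: int = 50, seed: int = 0) -> list[int]:
    """Track how many words still have nonzero probability after each generation."""
    rng = random.Random(seed)
    raw = {f"word{i}": 1.0 for i in range(5)} | {f"rare{i}": 0.1 for i in range(30)}
    total = sum(raw.values())
    human = {t: p / total for t, p in raw.items()}
    model = dict(human)
    history = [len(model)]
    for _ in range(generations):
        model = refit_with_human_data(human, model, n_samples, human_weight, rng)
        history.append(sum(1 for p in model.values() if p > 0))
    return history


print(surviving_words(human_weight=0.0))  # rare words drop out and never return
print(surviving_words(human_weight=0.2))  # all 35 words survive every generation
```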

Moreover, developing better methods for detecting AI-generated content could help mitigate the feedback loop problem. Companies like Google and OpenAI are already working on AI “watermarking” tools, which introduce hidden patterns into AI-generated content to make it identifiable. However, challenges remain, particularly in watermarking text, where detection can be unreliable.
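To illustrate how a text watermark can work in principle, here is a toy version of the “green list” idea from the academic literature (Kirchenbauer et al., 2023). It is not Google’s or OpenAI’s actual tooling, and it makes no claim about how their tools work internally. Each previous word pseudo-randomly splits the vocabulary into “green” and “red” words; the hypothetical generator always picks a green word, and the detector counts green words and reports a z-score. The vocabulary, the SHA-256 keying, and all function names are assumptions for illustration.

```python
import hashlib
import math


def is_green(prev_word: str, word: str, gamma: float = 0.5) -> bool:
    """Pseudo-randomly place `word` on the green list, keyed by the previous word."""
    digest = hashlib.sha256(f"{prev_word}|{word}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") / 2**64 < gamma


def generate_watermarked(vocab: list[str], start: str, length: int, gamma: float = 0.5) -> list[str]:
    """Toy generator: at each step choose the first vocabulary word that is green."""
    words = [start]
    for _ in range(length):
        prev = words[-1]
        green = [w for w in vocab if is_green(prev, w, gamma)]
        words.append(green[0] if green else vocab[0])  # fallback if no word is green
    return words


def watermark_z_score(words: list[str], gamma: float = 0.5) -> float:
    """How far the green-word count exceeds what unwatermarked text would give by chance."""
    n = len(words) - 1  # number of (previous, current) word pairs
    if n <= 0:
        return 0.0
    greens = sum(is_green(p, w, gamma) for p, w in zip(words, words[1:]))
    return (greens - gamma * n) / math.sqrt(n * gamma * (1 - gamma))


vocab = [f"w{i}" for i in range(50)]
text = generate_watermarked(vocab, start="w0", length=40)
print(round(watermark_z_score(text), 2))  # large positive z-score, very unlikely by chance
```

Paraphrasing or editing changes the word pairs, pulling the green fraction back toward `gamma` and the z-score toward zero, which is one reason detection of text watermarks can be unreliable.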

Another potential solution involves curating synthetic data with human oversight. By ranking AI-generated content and selecting the best outputs, humans can help prevent the decline in AI quality. This approach could be particularly useful in specific contexts, such as training smaller AI models or solving mathematical problems where the correct answers are known.
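Both forms of curation can be sketched in a few lines under clearly stated assumptions: the ratings stand in for human judgments, and the “math problems” are a hypothetical addition task whose correct answers can be checked exactly. `top_k_by_rating`, `SyntheticExample`, and `verify_sum` are illustrative names, not any company’s pipeline.

```python
from dataclasses import dataclass


def top_k_by_rating(outputs: list[str], ratings: list[float], k: int) -> list[str]:
    """Keep the k outputs that human reviewers rated highest."""
    ranked = sorted(zip(ratings, outputs), key=lambda pair: pair[0], reverse=True)
    return [output for _, output in ranked[:k]]


@dataclass
class SyntheticExample:
    operands: tuple[int, int]  # hypothetical task: add two integers
    proposed_answer: int       # answer produced by a model


def verify_sum(example: SyntheticExample) -> bool:
    """The correct answer is known, so each synthetic example can be checked exactly."""
    a, b = example.operands
    return example.proposed_answer == a + b


drafts = ["draft A", "draft B", "draft C"]
print(top_k_by_rating(drafts, ratings=[0.2, 0.9, 0.5], k=2))  # → ['draft B', 'draft C']

batch = [
    SyntheticExample((2, 3), 5),
    SyntheticExample((10, 7), 18),  # wrong: 10 + 7 = 17
    SyntheticExample((4, 4), 8),
]
kept = [ex for ex in batch if verify_sum(ex)]
print(len(kept))  # → 2
```

Exact verification only works where a ground truth exists, which is why this approach suits narrow domains such as arithmetic better than open-ended writing.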

## A Call for Vigilance in AI Development

As AI continues to advance, the issue of AI feedback loops and model collapse cannot be ignored. The research highlighted by Bhatia underscores the importance of diverse, high-quality training data. Without careful oversight, the very tools designed to enhance our lives could degrade in quality, leading to unintended consequences.

For now, there is no substitute for real, human-generated data. As Bhatia aptly concludes, “There’s no replacement for the real thing.” AI companies must remain vigilant in their efforts to safeguard the integrity of their models, ensuring that the future of AI is one of continued progress rather than gradual decline.